Mendive. Journal of Education, October-December 2022; 20(4): 1160-1175

Translated from the original in Spanish

Original article

Indicators to evaluate the impact of the post-COVID recovery strategy. University of Pinar del Río "Hermanos Saíz Montes de Oca"

Benito Bravo Echevarría¹ http://orcid.org/0000-0002-1395-1855

Carlos Luis Fernández Peña¹ http://orcid.org/0000-0001-6833-0055

¹University of Pinar del Río "Hermanos Saíz Montes de Oca". Cuba. benito.bravo@upr.edu.cu; carlosl.fernandez@upr.edu.cu

Received: May 4, 2022.

Accepted: July 1, 2022.

ABSTRACT

Since March 2020, the COVID-19 pandemic has strongly influenced the Cuban educational system in general and the Higher Education subsystem in particular. At the University of Pinar del Río "Hermanos Saíz Montes de Oca", important transformations took place in all its substantive processes, which made it necessary to draw up a post-COVID recovery strategy. The purpose of this work is to validate the indicators designed to evaluate the impact of that strategy. For the empirical validation, experts were consulted: a sample of 32 experts, selected by calculating the Expert Competence Coefficient, answered an initial survey. Their answers were analyzed with the Content Validity Coefficient of Hernández-Nieto (2002), which made it possible to establish the experts' degree of agreement and the validity of the indicators. At least an "acceptable" Content Validity Coefficient was obtained for all the indicators. According to the data obtained, all the indicators are valid to evaluate the impact of the designed post-COVID strategy.

Keywords: Expert Competence Coefficient; Content Validity Coefficient; impact evaluation; impact; indicators.

INTRODUCTION

The COVID-19 pandemic caused important transformations in the substantive university processes, fundamentally in the teaching-educational process. This situation led to the total closure of face-to-face activities in our Higher Education institutions and gave a leading role to online activities and distance work in order to continue the training of professionals.

For these reasons, at the request of the management of the University of Pinar del Río "Hermanos Saíz Montes de Oca", the Center for the Study of Educational Sciences of Pinar del Río (CECE-PRI) developed a post-COVID recovery strategy to give continuity to the training of professionals. One of its central ideas was to promote the use of Information and Communication Technologies (ICT) as an alternative under social distancing; according to Cuello and Solano (2021), ICTs "... make student learning personalized and in little time, (...) making it easier for him to carry out the activities" (p. 40). ICTs expand the possibilities of communication and work between people who are physically distant.

Likewise, the active role that students must play in knowledge management was taken into account since, as Jiménez (2019) indicates:

Knowledge management guides the discovery of the ability to act to produce permanent positive results through a set of activities that are established to be deployed with the aim of using, developing and managing the knowledge of the actors available in the organization (p. 1).

The strategy was based on the principles of flexibility and contextualization, accessibility and equity, and the relationship between the University, the Municipal University Center and the employing entities. Its premises were the training of the lead professors of each academic year, pedagogical leaders and support staff, and it recognizes three connectivity scenarios.

Faced with this reality, in 2020 a team of CECE-PRI researchers and collaborators took on the task of developing the dimensions and indicators to assess the impact of the post-COVID recovery strategy (see Annex A). Before designing and applying the instruments, it was necessary to determine whether they were valid and reliable. Solans-Domenech et al. (2019) suggest choosing the most pertinent way to demonstrate the validity of a research proposal, which ensures that the information obtained is the information actually sought.

To demonstrate the validity of the indicators, the Content Validity Coefficient (CVC) method of Hernández-Nieto (2002) was applied. This method evaluates the judgments of experts, specialists or users and, according to González et al. (2018), "... constitutes a heuristic method of high scientific rigor that allows the search for consensus based on qualitative approaches derived from the experience and knowledge of a group of people" (p. 100).

Before taking this step, it is essential to select the experts who will participate in determining that validity, which requires calculating the Expert Competence Coefficient. According to Cabero and Barroso (2013), this coefficient "...is made from the opinion shown by the expert about his level of knowledge about the research problem..." (p. 29).

Expert judgment is a strategy with broad advantages for demonstrating the validity of research, and it is especially relevant here, since the experts are the ones who must eliminate irrelevant items and modify those that require it. Cabero and Llorente (2013) consider it "...very useful to determine knowledge about content and difficult, complex and novel or little studied topics (...)" (p. 14).

It is necessary to take into account the experts' level of knowledge, handling of information, professional experience, willingness to participate in the process, availability of time, commitment to take part in all the planned application rounds, and years of experience in the specific subject. Submitting an instrument to the consultation and judgment of experts must be based on validity and reliability criteria; in this sense, as González et al. (2018) point out, expert consultation is a heuristic method of high scientific rigor that seeks consensus based on qualitative approaches derived from the experience and knowledge of a group of people (p. 100).

For Juárez-Hernández and Tobón (2018), there are three fundamental aspects to consider in expert judgment: the concept of expert, the determination of the degree of knowledge in the area or construct, and the number of experts needed to evaluate the instrument. Meanwhile, Quezada et al. (2020) state that expert judgment makes it possible to assess information collection and analysis instruments, the methodologies used, teaching materials, opinions on a specific aspect, and conclusive assessments of a problem or its solutions, among others.

But what is an expert? An expert is a person capable of providing reliable assessments of the problem in question, with sufficient accumulated knowledge of the subject and years of professional experience in it. For García et al. (2020), an expert must have broad and deep knowledge of the activity under analysis and be familiar with the system in which the object of study is contextualized.

The quality of the process and its results may depend on an adequate selection of experts (López-Gómez, 2018; Cabero-Almenara et al., 2020), as well as on the number of experts taking part in the process, although authors do not agree on a uniform criterion for that number. Zartha-Sossa et al. (2017), for example, suggest between 9 and 24 experts. For the purposes of this study, 38 candidates were initially considered, of whom 32 were selected as experts.

The objective of this paper is to present the results of the validation process of the indicators designed to evaluate the impact of the post-COVID recovery strategy.

MATERIALS AND METHODS

From a statistical point of view, the indicators to evaluate the impact of the post-COVID recovery strategy were validated with the Content Validity Coefficient (CVC) of Hernández-Nieto (2002), which was used to evaluate the judgments of the selected experts and to assess their degree of agreement on each item. Although Hernández-Nieto (2002) recommends the participation of between three and five experts for each of the items and for the instrument as a whole, 32 experts were selected in this study.

To guarantee the quality of the selection of the experts, the procedure followed in this research was based on calculating the Expert Competence Coefficient, so that, through their opinion and self-assessment, the candidates indicated their degree of knowledge about the object of investigation. The selection criteria were: being a professor with extensive knowledge and experience in this particular area of knowledge, holding the teaching category of Assistant, Auxiliary or Head, holding at least a Master's degree, and having at least 15 years of experience in Higher Education.

Beforehand, the teaching and research work of 38 candidates had been studied. The requirements described were then verified through an initial survey sent by email to the 32 candidates considered to meet those criteria. The willingness of the potential experts to participate in the study was also taken into account, and the objectives of the research were explained to them.

Characterization of the Experts

Teaching category: 11 Head (34.4%), 17 Assistant (53.1%) and 4 Auxiliary (12.5%).

Academic degree: 21 MSc (65.6%).

Scientific degree: 11 Dr. C. (34.4%).

Average years of experience in Higher Education: 16.

Experience in the area of knowledge: 100%.

Subsequently, the competence coefficient of the potential experts (K) was evaluated with the formula K = (Kc + Ka)/2, where Kc is the knowledge coefficient and Ka the argumentation coefficient. To determine Kc, each candidate marked with a cross, on an increasing scale from 1 to 10, the degree of knowledge or general information they have about the subject of study, and the marked value was multiplied by 0.1. Table 1 shows the answer given by each expert, and Table 2 the knowledge coefficient Kc calculated from these answers.
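The Kc step is a simple rescaling, sketched below as a minimal illustration rather than the authors' own tool. The list `TABLE1_MARKS` is a transcription of the marks in Table 1, and the helper name `knowledge_coefficient` is hypothetical.

```python
# Minimal sketch (hypothetical helper, not the authors' software):
# Kc = self-assessed mark on the increasing 1-10 scale multiplied by 0.1.

# Marks transcribed from Table 1, in expert order 1..32.
TABLE1_MARKS = [8, 9, 8, 10, 8, 9, 9, 9, 8, 8, 8, 8, 10, 9, 9, 8,
                8, 9, 8, 9, 10, 9, 9, 10, 10, 8, 9, 10, 8, 8, 9, 9]


def knowledge_coefficient(mark: int) -> float:
    """Return Kc for one expert's mark on the 1-10 scale."""
    if not 1 <= mark <= 10:
        raise ValueError("the mark must be between 1 and 10")
    # Rounding removes binary floating-point noise such as 0.8000000000000002.
    return round(mark * 0.1, 2)


# One Kc per expert, in the same order as Table 1.
kc_values = [knowledge_coefficient(m) for m in TABLE1_MARKS]
```

Applied to the 32 marks, the sketch reproduces Table 2: 13 experts with Kc = 0.8, 13 with Kc = 0.9 and 6 with Kc = 1.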

Each expert was then asked to self-assess their levels of argumentation or rationale, as illustrated in Table 3; that is, the degree of influence that each source had on their knowledge of the proposed topic, marking with an X one of the options High, Medium or Low for each source.

Next, the argumentation coefficient (Ka) was calculated using the pattern defined in Table 4, an adaptation of the table proposed by Dobrov and Smirnov (1972). For each expert, the options marked in the self-assessment of the sources of argumentation (Table 3) were matched against the pattern in Table 4 and the corresponding values were added. For example, for Expert No. 1: Ka = 0.2 + 0.5 + 0.05 + 0.05 + 0.05 + 0.05 = 0.90.
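The matching of Table 3 against Table 4 amounts to a lookup and a sum. The sketch below assumes hypothetical short keys for the six sources of argumentation; the numeric pattern and Expert 1's marks are taken from Tables 3 and 4.

```python
# Minimal sketch of the Ka calculation. The source keys are hypothetical
# labels for the six rows of Table 4 (pattern adapted from Dobrov and Smirnov, 1972).
PATTERN = {
    "theoretical_analyses": {"high": 0.3, "medium": 0.2, "low": 0.1},
    "experience_gained": {"high": 0.5, "medium": 0.4, "low": 0.2},
    "national_authors": {"high": 0.05, "medium": 0.05, "low": 0.05},
    "foreign_authors": {"high": 0.05, "medium": 0.05, "low": 0.05},
    "knowledge_abroad": {"high": 0.05, "medium": 0.05, "low": 0.05},
    "intuition": {"high": 0.05, "medium": 0.05, "low": 0.05},
}


def argumentation_coefficient(marks: dict[str, str]) -> float:
    """Add the pattern value of the degree marked for each source."""
    if set(marks) != set(PATTERN):
        raise ValueError("exactly one degree must be marked for every source")
    total = sum(PATTERN[source][degree] for source, degree in marks.items())
    # Rounding removes floating-point noise from the sum of decimals.
    return round(total, 2)


# Expert 1's self-assessment, as marked in Table 3.
EXPERT_1 = {
    "theoretical_analyses": "medium",
    "experience_gained": "high",
    "national_authors": "high",
    "foreign_authors": "high",
    "knowledge_abroad": "medium",
    "intuition": "medium",
}

ka_expert_1 = argumentation_coefficient(EXPERT_1)  # 0.2 + 0.5 + 4 * 0.05 = 0.9
```

With this pattern, marking every source as High gives the maximum Ka = 1.0 (0.3 + 0.5 + 4 × 0.05), and marking every source as Low gives 0.5.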

With the final values obtained, the experts were classified into three groups:

1) If 0.8 < K ≤ 1.0 (high influence);

2) If 0.7 ≤ K ≤ 0.8 (medium influence);

3) If 0.5 ≤ K < 0.7 (low influence).
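Combining Kc and Ka and applying the three intervals can be sketched as follows. The function names are hypothetical, and the label returned for K < 0.5 is an assumption of this sketch, since the classification above does not define that case.

```python
def competence_coefficient(kc: float, ka: float) -> float:
    """K = (Kc + Ka) / 2, rounded so that values such as 0.8 compare exactly."""
    return round((kc + ka) / 2, 4)


def competence_group(k: float) -> str:
    """Classify K with the three intervals of the classification above."""
    if 0.8 < k <= 1.0:
        return "high"
    if 0.7 <= k <= 0.8:
        return "medium"
    if 0.5 <= k < 0.7:
        return "low"
    # Assumption: the source defines no group below 0.5.
    return "outside the intervals (K < 0.5)"


# Expert 1: Kc = 0.8 (Table 2) and Ka = 0.90 (Table 4 pattern).
k_expert_1 = competence_coefficient(0.8, 0.9)  # 0.85
group_expert_1 = competence_group(k_expert_1)  # "high"
```

Rounding K before classifying it avoids a boundary case such as K = 0.8 being misclassified because of binary floating-point error.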

The indicators were interpreted on the basis that those with a CVC greater than 0.80 allow the impact of the post-COVID recovery strategy to be evaluated with greater reliability, although indicators with a CVC greater than 0.70 were also considered acceptable, 0.70 being the critical value for accepting an indicator (see Table 5).

Next, the Content Validity Coefficient of Hernández-Nieto (2002) was calculated to assess the experts' degree of agreement, with each indicator rated on an 11-point Likert-type numerical scale.

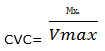

The initial content validity coefficient of each indicator (CVCi) was calculated with the following formula:

$$CVC_i = \frac{M_x}{V_{max}}$$

where Mx represents the mean of the scores given by the experts to the indicator and Vmax the maximum score that the indicator could achieve.

Next, the error assigned to each indicator (Pei) was calculated, which reduces the possible bias introduced by any of the judges, j being the number of participating experts:

$$Pe_i = \left(\frac{1}{j}\right)^{j}$$

Finally, the final CVC of each indicator was calculated by applying the formula:

$$CVC = CVC_i - Pe_i$$
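The three CVC formulas can be combined into one small function. This is a sketch assuming that the 11-point scale runs from 0 to 10, so that Vmax = 10; the scores in the example are invented for illustration and are not the study's data. With 32 judges, Pei = (1/32)^32 is below 10^-48, so in practice the final CVC equals Mx/Vmax; the five-decimal values reported in the result tables are consistent with this (for instance, 0.79375 = 254/320, a score total of 254 from 32 judges with Vmax = 10).

```python
def content_validity_coefficient(scores: list[float], v_max: float = 10.0) -> float:
    """Final CVC of one indicator following Hernández-Nieto (2002).

    scores: the score each judge gave the indicator (assumed 0..v_max).
    """
    j = len(scores)
    if j == 0:
        raise ValueError("at least one judge is needed")
    if any(not 0 <= s <= v_max for s in scores):
        raise ValueError("every score must lie between 0 and v_max")
    m_x = sum(scores) / j       # mean score given by the judges (Mx)
    cvc_i = m_x / v_max         # initial coefficient, CVCi = Mx / Vmax
    pe_i = (1 / j) ** j         # error assigned to the indicator, Pei = (1/j)^j
    return cvc_i - pe_i         # final coefficient, CVC = CVCi - Pei


# Invented example: 32 judges, 22 scoring 8 and 10 scoring 7 (total 246).
example_scores = [8] * 22 + [7] * 10
example_cvc = content_validity_coefficient(example_scores)
print(round(example_cvc, 5))  # 0.76875
```

The correction Pei only matters for very small panels: with three judges who all give the maximum score, the CVC is 1 - 1/27 ≈ 0.963 rather than 1.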

RESULTS

The following table shows the results of the answers given by each of the experts, in relation to the degree of knowledge or general information they have on the subject of study.

Table 1- Results of the answers given by each expert

| Expert | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | | | | | | | | x | | |
| 2 | | | | | | | | | x | |
| 3 | | | | | | | | x | | |
| 4 | | | | | | | | | | x |
| 5 | | | | | | | | x | | |
| 6 | | | | | | | | | x | |
| 7 | | | | | | | | | x | |
| 8 | | | | | | | | | x | |
| 9 | | | | | | | | x | | |
| 10 | | | | | | | | x | | |
| 11 | | | | | | | | x | | |
| 12 | | | | | | | | x | | |
| 13 | | | | | | | | | | x |
| 14 | | | | | | | | | x | |
| 15 | | | | | | | | | x | |
| 16 | | | | | | | | x | | |
| 17 | | | | | | | | x | | |
| 18 | | | | | | | | | x | |
| 19 | | | | | | | | x | | |
| 20 | | | | | | | | | x | |
| 21 | | | | | | | | | | x |
| 22 | | | | | | | | | x | |
| 23 | | | | | | | | | x | |
| 24 | | | | | | | | | | x |
| 25 | | | | | | | | | | x |
| 26 | | | | | | | | x | | |
| 27 | | | | | | | | | x | |
| 28 | | | | | | | | | | x |
| 29 | | | | | | | | x | | |
| 30 | | | | | | | | x | | |
| 31 | | | | | | | | | x | |
| 32 | | | | | | | | | x | |

Based on the above results, the knowledge coefficient Kc was calculated and is shown in Table 2.

Table 2- Results of the calculation of the Knowledge Coefficient (Kc)

| Expert | Kc | Expert | Kc | Expert | Kc |
|---|---|---|---|---|---|
| 1 | 0.8 | 12 | 0.8 | 23 | 0.9 |
| 2 | 0.9 | 13 | 1 | 24 | 1 |
| 3 | 0.8 | 14 | 0.9 | 25 | 1 |
| 4 | 1 | 15 | 0.9 | 26 | 0.8 |
| 5 | 0.8 | 16 | 0.8 | 27 | 0.9 |
| 6 | 0.9 | 17 | 0.8 | 28 | 1 |
| 7 | 0.9 | 18 | 0.9 | 29 | 0.8 |
| 8 | 0.9 | 19 | 0.8 | 30 | 0.8 |
| 9 | 0.8 | 20 | 0.9 | 31 | 0.9 |
| 10 | 0.8 | 21 | 1 | 32 | 0.9 |
| 11 | 0.8 | 22 | 0.9 | | |

Table 3 illustrates the results of the self-assessment carried out by one of the experts regarding their levels of argumentation or justification; that is, the degree of influence that each source had on the knowledge they have of the proposed topic.

Table 3- Example of the assessment of the sources of theoretical argumentation of expert 1

| Sources of argumentation | High influence | Medium influence | Low influence |
|---|---|---|---|
| Theoretical analyses carried out by you on the subject in question | | x | |
| Experience gained | x | | |
| Works of national authors | x | | |
| Works of foreign authors | x | | |
| Your knowledge about the state of the subject abroad | | x | |
| Your intuition | | x | |

The pattern of factors used to calculate the argumentation coefficient (Ka) is shown below:

Table 4- Pattern of factors for the calculation of the Coefficient of argumentation (Ka). The results of expert 1 are highlighted

| Sources of argumentation | High influence | Medium influence | Low influence |
|---|---|---|---|
| Theoretical analyses carried out by you on the subject in question | 0.3 | **0.2** | 0.1 |
| Experience gained | **0.5** | 0.4 | 0.2 |
| Works of national authors | **0.05** | 0.05 | 0.05 |
| Works of foreign authors | **0.05** | 0.05 | 0.05 |
| Your knowledge about the state of the subject abroad | 0.05 | **0.05** | 0.05 |
| Your intuition | 0.05 | **0.05** | 0.05 |

Although Hernández-Nieto (2002) establishes as a criterion that items with a CVC greater than 0.80 are those that best allow the instrument to measure the defined construct, in this study the criterion adopted was that indicators with a CVC greater than 0.80 allow the impact of the post-COVID recovery strategy to be evaluated with greater reliability, while indicators with a CVC greater than 0.70 were also considered acceptable, 0.70 being the critical acceptance value for an indicator (see Table 5).

Table 5- Scale of interpretation of the Content Validity Coefficient (CVC) obtained in each of the indicators

| CVC (Content Validity Coefficient) | Interpretation |
|---|---|
| Less than 0.60 | Unacceptable validity |
| Equal to or greater than 0.60 and less than 0.70 | Poor validity |
| Equal to or greater than 0.70 and less than 0.80 | Acceptable validity |
| Equal to or greater than 0.80 and less than 0.90 | Good validity |
| Equal to or greater than 0.90 | Excellent validity |
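The Table 5 scale can be expressed as a lookup over lower bounds. The sketch below is a hypothetical helper that follows the table's inclusive lower limits (so exactly 0.70 counts as acceptable); the constant and function names are illustrative only.

```python
# Bands of Table 5 as (inclusive lower bound, label), from highest to lowest.
CVC_BANDS = [
    (0.90, "excellent validity"),
    (0.80, "good validity"),
    (0.70, "acceptable validity"),
    (0.60, "poor validity"),
]
CRITICAL_VALUE = 0.70  # minimum CVC for accepting an indicator


def interpret_cvc(cvc: float) -> str:
    """Return the Table 5 interpretation for a CVC value."""
    for lower, label in CVC_BANDS:
        if cvc >= lower:
            return label
    return "unacceptable validity"


def is_accepted(cvc: float) -> bool:
    """True when the CVC reaches the critical acceptance value."""
    return cvc >= CRITICAL_VALUE
```

For example, interpret_cvc(0.70938) returns "acceptable validity" and interpret_cvc(0.80) returns "good validity".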

Based on the established criteria, no indicator was rated unacceptable or poor, and none reached the excellent category. All the indicators fell within the acceptable and good categories, with values above the critical inclusion value of 0.70, corresponding to an "acceptable" evaluation consensus.

Analysis by dimensions and indicators

For Dimension I "Reduction of epidemiological risks", the CVC for the validity criterion ranged between 0.75313 and 0.79375, so all its indicators can be considered to have acceptable validity (Table 6).

Table 6- Dimension I "Reduction of epidemiological risks"

| Indicator | CVC (validity criterion) | Rounded CVC |
|---|---|---|
| I1 | 0.79375 | 0.8 |
| I2 | 0.77500 | 0.8 |
| I3 | 0.77500 | 0.8 |
| I4 | 0.79375 | 0.8 |
| I5 | 0.75313 | 0.8 |
| I6 | 0.76250 | 0.8 |

For Dimension II "Impact indicators of the teaching strategy", the CVC for the validity criterion ranged between 0.74063 and 0.80313. All the indicators reached a CVC greater than 0.70; the indicator "Effectiveness of the training program for academic leaders at different levels" had the highest CVC, with good validity, and the indicator "Effectiveness in determining the methods of teaching-learning in the conditions of a prolonged crisis scenario" had the lowest (Table 7).

Table 7- Dimension II "Impact indicators of the teaching strategy"

| Indicator | CVC (validity criterion) | Rounded CVC |
|---|---|---|
| II1 | 0.80313 | 0.8 |
| II2 | 0.76563 | 0.8 |
| II3 | 0.75625 | 0.8 |
| II4 | 0.79063 | 0.8 |
| II5 | 0.76250 | 0.8 |
| II6 | 0.76875 | 0.8 |
| II7 | 0.74063 | 0.7 |
| II8 | 0.75313 | 0.8 |
| II9 | 0.79063 | 0.8 |
| II10 | 0.77500 | 0.8 |

In the case of Dimension III "Indicators on the impact of the link with labor entities for the enhancement of the training process", the CVC for the validity criterion ranged between 0.72500 and 0.78750, that is, acceptable validity, although two of its indicators, "Effectiveness of the training actions developed in the work environment" (the lowest of all) and "Effectiveness of the changes that occur in the employing entities...", present values below the average (Table 8).

Table 8- Dimension III "Impact of the link with labor entities for the enhancement of the training process"

| Indicator | CVC (validity criterion) | Rounded CVC |
|---|---|---|
| III1 | 0.78750 | 0.8 |
| III2 | 0.77188 | 0.8 |
| III3 | 0.75938 | 0.8 |
| III4 | 0.78438 | 0.8 |
| III5 | 0.72500 | 0.7 |
| III6 | 0.75625 | 0.8 |
| III7 | 0.74375 | 0.7 |

The CVC for the validity criterion of Dimension IV "Indicators on the achievement of the essentiality of the contents in the curricular adaptation" ranged between 0.74688 and 0.79063, which is considered acceptable; the lowest value, a CVC for the validity criterion of 0.74688, corresponds to the indicator "Effectiveness of the process of identifying strengths and opportunities offered by the context to design recovery strategies" (Table 9).

Table 9- Dimension IV "Achievement of the essentiality of the contents in the curricular adaptation"

| Indicator | CVC (validity criterion) | Rounded CVC |
|---|---|---|
| IV1 | 0.80313 | 0.8 |
| IV2 | 0.80000 | 0.8 |
| IV3 | 0.76250 | 0.8 |
| IV4 | 0.79688 | 0.8 |
| IV5 | 0.74063 | 0.7 |
| IV6 | 0.77813 | 0.8 |
| IV7 | 0.75000 | 0.8 |

In Dimension V "Impact indicators achieved by the flexibility of curricular adjustments", all the indicators present a CVC for the validity criterion of at least 0.74688 and are considered to have acceptable validity (Table 10).

Table 10- Dimension V "Impacts achieved by the flexibility of curricular adjustments"

| Indicator | CVC (validity criterion) | Rounded CVC |
|---|---|---|
| V1 | 0.78750 | 0.8 |
| V2 | 0.78125 | 0.8 |
| V3 | 0.74688 | 0.7 |
| V4 | 0.79063 | 0.8 |

In Dimension VI "Effectiveness of the training actions carried out at each stage", all the indicators reach at least an acceptable CVC for the validity criterion; only two, "Effectiveness of the adaptations of the learning activities system" and "Effectiveness of the actions to guarantee continuity in the following course", are below the average (Table 11).

Table 11 - Dimension VI "Effectiveness of the training actions carried out at each stage"

| Indicator | CVC (validity criterion) | Rounded CVC |
|---|---|---|
| VI1 | 0.76875 | 0.8 |
| VI2 | 0.79063 | 0.8 |
| VI3 | 0.76563 | 0.8 |
| VI4 | 0.78750 | 0.8 |
| VI5 | 0.73438 | 0.7 |
| VI6 | 0.79063 | 0.8 |
| VI7 | 0.74063 | 0.7 |
| VI8 | 0.77500 | 0.8 |
| VI9 | 0.80625 | 0.8 |

Finally, for Dimension VII "Indicators of satisfaction of the participants in the training process", all the indicators reach at least an acceptable CVC for the validity criterion, although two, "Satisfaction of the teachers with the flexibility achieved with the curricular adjustments" and "Satisfaction of the students' families with the training process during the recovery stage", present noticeably lower values than the others (Table 12).

Table 12- Dimension VII "Satisfaction of the participants in the training process"

| Indicator | CVC (validity criterion) | Rounded CVC |
|---|---|---|
| VII1 | 0.76250 | 0.8 |
| VII2 | 0.77813 | 0.8 |
| VII3 | 0.75313 | 0.8 |
| VII4 | 0.80938 | 0.8 |
| VII5 | 0.72813 | 0.7 |
| VII6 | 0.75000 | 0.8 |
| VII7 | 0.70938 | 0.7 |
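The summary given after Table 5 (all indicators between the acceptable and good categories, none below 0.70) can be cross-checked against the values in Tables 6 to 12. The sketch below is a hypothetical helper, not part of the authors' procedure; the values are transcribed from the tables and the band limits follow Table 5.

```python
from collections import Counter

# CVC values transcribed from Tables 6 to 12, in indicator order.
CVC_BY_DIMENSION = {
    "I": [0.79375, 0.77500, 0.77500, 0.79375, 0.75313, 0.76250],
    "II": [0.80313, 0.76563, 0.75625, 0.79063, 0.76250,
           0.76875, 0.74063, 0.75313, 0.79063, 0.77500],
    "III": [0.78750, 0.77188, 0.75938, 0.78438, 0.72500, 0.75625, 0.74375],
    "IV": [0.80313, 0.80000, 0.76250, 0.79688, 0.74063, 0.77813, 0.75000],
    "V": [0.78750, 0.78125, 0.74688, 0.79063],
    "VI": [0.76875, 0.79063, 0.76563, 0.78750, 0.73438,
           0.79063, 0.74063, 0.77500, 0.80625],
    "VII": [0.76250, 0.77813, 0.75313, 0.80938, 0.72813, 0.75000, 0.70938],
}


def band(cvc: float) -> str:
    """Table 5 category for a CVC value (inclusive lower limits)."""
    if cvc >= 0.90:
        return "excellent"
    if cvc >= 0.80:
        return "good"
    if cvc >= 0.70:
        return "acceptable"
    if cvc >= 0.60:
        return "poor"
    return "unacceptable"


all_values = [v for values in CVC_BY_DIMENSION.values() for v in values]
tally = Counter(band(v) for v in all_values)
lowest = min(all_values)
```

Run on the transcribed values, the tally gives 50 indicators: 45 in the acceptable band and 5 in the good band (II1, IV1, IV2, VI9 and VII4), with the lowest value, 0.70938 (VII7), above the critical value of 0.70.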

DISCUSSION

According to Hernández and Robaina (2017): "For the selection of experts, different routes can be considered according to the type of study defined by the researcher. In addition, it can be adapted according to the needs or comforts of those who execute the verification" (p. 5).

Thus, the Expert Competence Coefficient was calculated so that the experts, as Robles and Rojas (2015) put it, "(...) with their opinion and self-assessment indicate the degree of knowledge about the object of investigation, as well as the sources that allow them to argue and justify said level" (p. 2). This technique made it possible to discriminate adequately among the candidates, based on their self-assessment of their knowledge of the subject. All the experts reported high levels of argumentation in this regard. The technique proved effective, in line with Cabero and Llorente (2013), who describe its use as "(...) showing high levels of efficacy" (p. 12).

In this sense, asking the selected experts to self-assess and indicate their degree of knowledge of the object of investigation guaranteed the quality of their selection, together with criteria such as being a professor with extensive knowledge and experience in this particular area of knowledge, holding the teaching category of Assistant or higher, holding at least a Master's degree and having at least 15 years of experience in Higher Education. This made it possible to test the degree of cohesion of the experts' judgments and showed that the opinions they issued on the proposed system of indicators are consistent.

The predominant teaching category among the experts who participated in the study was Assistant (53.1%); most held a Master's degree (65.6%) and 34.4% were Doctors of Science; their average experience in Higher Education was 16 years, and 100% had experience in the area of knowledge.

Based on the criteria of Hernández-Nieto (2002), which establish that items with a CVC greater than 0.80 are those that best allow the instrument to measure the defined construct, indicators with a CVC higher than 0.70 were also considered acceptable in this study, 0.70 being the critical acceptance value for an indicator. No indicator was rated unacceptable or poor, and none reached the excellent category, since all fell between the acceptable and good categories.

The Content Validity Coefficient of Hernández-Nieto (2002) made it possible to evaluate content validity quantitatively through the expert judgment technique, showing a significant degree of agreement and validity among the 32 judges who evaluated the indicators and dimensions used to assess the impact of the post-COVID recovery strategy of the University of Pinar del Río "Hermanos Saíz Montes de Oca".

All the indicators developed were found to have sufficient validity and reliability, with values above the minimum thresholds, which adequately supports their internal consistency and validity. The proposed system of indicators was also enriched with the participants' opinions. For all of the above, the system of indicators to assess the impact of the post-COVID recovery strategy of the University of Pinar del Río "Hermanos Saíz Montes de Oca" can be considered validated.

In conclusion, according to the results obtained, a valid and reliable tool is presented that will make it possible to evaluate the impact of the post-COVID recovery strategy of the University of Pinar del Río "Hermanos Saíz Montes de Oca".

Regarding expert judgment, the selection of experts and its qualitative and quantitative approach are considered highly relevant elements for the evaluation and validation of an instrument (Juárez-Hernández and Tobón, 2018). For this reason, experts with sufficient academic and scientific qualifications and extensive knowledge and experience in this particular area of knowledge were sought.

BIBLIOGRAPHIC REFERENCES

Cabero-Almenara, J., & Barroso-Osuna, J. (2013). La utilización del juicio de experto para la evaluación de TIC: el coeficiente de competencia experta. Bordón. Revista de Pedagogía, 65(2), 25-38. https://idus.us.es/xmlui/handle/11441/24562

Cabero-Almenara, J., & Llorente-Cejudo, M. C. (2013). La aplicación del juicio de experto como técnica de evaluación de las tecnologías de la información (TIC). Revista de Tecnología de Información y Comunicación en Educación, 7(2), 11-22. https://www.researchgate.net/profile/Julio_Ponce2/publication/272686564_Reingeniería_de_una_Ontología_de_Estilos_de_Aprendizaje_para_la_Creación_de_Objetos_de_Aprendizaje/links/5643a8a308ae9f9c13e05f3a.pdf#page=13

Dobrov, G. M., & Smirnov, L. P. (1972). Forecasting as a means for scientific and technological policy control. Technological Forecasting and Social Change, 4(1), 5-18. http://dx.doi.org/10.1016/0040-1625(72)90043-1

García, N., Carreño, A., & Doumet, N. (2020). Validación del Modelo de Gestión Sostenible para el Desarrollo Turístico en Vinculación Universidad - Comunidades Manabitas. Ecuador. Investigación y Negocios, 13(21). https://doi.org/10.38147/inv&neg.v13i21.82

Hernández-Nieto, R. A. (2002). Contribuciones al análisis estadístico. Facultad de Ciencias Jurídicas y Políticas, Universidad de Los Andes; Instituto de Estudios en Informática (IESINFO), Mérida. 119 p.

Hernández, F., & Robaina, J. I. (2017). Guía para la utilización de la metodología Delphi en las etapas de comprobación de productos terminados tipo software educativo. Revista 16 de Abril, 56(263), 26-31. http://www.rev16deabril.sld.cu/index.php/16_04/article/view/429/220

Jiménez Chaves, V. E. (2019). Gestión del conocimiento en la educación a distancia. Ciencia Latina. Revista multidisciplinar, 3(1), 156-165. https://ciencialatina.org/index.php/cienciala/article/view/16/9

Juárez-Hernández, L. G., & Tobón, S. (2018). Análisis de los elementos implícitos en la validación de contenido de un instrumento de investigación. Revista Espacios, 39(53), 23-30.

López-Gómez, E. (2018). El método Delphi en la investigación actual en educación: una revisión teórica y metodológica. Educación XX1, 21(1), 17-40. http://dx.doi.org/10.5944/educXX1.15536

Quezada, G., Castro-Arellano, M., Oliva, J., Gallo, C., & Quesada-Castro, M. (2020). Método Delphi como Estrategia Didáctica en la Formación de Semilleros de Investigación. Revista Innova Educación, 2(1), 78-90. https://doi.org/10.35622/j.rie.2020.01.005

Robles Garrote, P., & Rojas, M. D. C. (2015). La validación por juicio de expertos: dos investigaciones cualitativas en Lingüística aplicada. Revista Nebrija de Lingüística Aplicada a la Enseñanza de Lenguas, 9(18), 124-139. https://revistas.nebrija.com/revista-linguistica/article/view/259/227

Solans-Domenech, M., Pons, J. M. V., Adam, P., Grau, J., & Aymerich, M. (2019). Development and Validation of a Questionnaire to Measure Research Impact. Research Evaluation, 28(3), 253-262. https://doi.org/10.1093/reseval/rvz007

Zartha-Sossa, J. W., Montes-Hincapié, J. M., Toro-Jaramillo, I. D., Hernández-Zarta, R., Villada-Castillo, H. S., & Hoyos-Concha, J. L. (2017). Método Delphi en estudios de prospectiva tecnológica: una aproximación para calcular el número de expertos y aplicación del coeficiente de competencia experta k. Biotecnología en el Sector Agropecuario y Agroindustrial, 15(1), 105-115. http://dx.doi.org/10.18684/BSAA(15)105-115

Annex A - Dimensions and indicators to evaluate the impact of the post-COVID recovery strategy of the UPR.

Dimension I. Reduction of epidemiological risks

Indicators

Dimension II. Impact indicators of the teaching strategy

Dimension V. Impact indicators achieved by the flexibility of curricular adaptations

Dimension VI. Effectiveness of the training actions carried out at each stage

Conflict of interest:

The authors declare that they have no conflicts of interest.

Authors' contributions:

The authors participated in writing the work and analyzing the documents.


This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.

Copyright (c) Benito Bravo Echevarría, Carlos Luis Fernández Peña